MUNICH: Rapid advances in artificial intelligence could help strengthen defenses against cybersecurity threats, according to Google CEO Sundar Pichai. Amid growing concerns about AI's potentially sinister uses, Pichai said the technology could help businesses and governments detect and neutralize threats from hostile actors more quickly.

“We have good reason to be concerned about how this may affect cybersecurity. However, I believe that AI, paradoxically, helps our cybersecurity defense,” Pichai told attendees at the Munich Security Conference late last week. Cyberattacks have grown in frequency and sophistication as hostile actors increasingly use them to extort money and exert power.

Cybersecurity Ventures, a cyber-research firm, estimates that the cost of cyberattacks to the global economy will rise from an estimated $8 trillion in 2023 to $10.5 trillion by 2025. A January assessment by the UK's National Cyber Security Centre, part of the intelligence agency GCHQ, concluded that AI would further heighten these risks by lowering the barriers to entry for cybercriminals and enabling a rise in malicious cyber activity, including ransomware attacks.

However, Pichai said AI is also shortening the time defenders need to detect attacks and respond to them. He said this would ease the so-called “defender's dilemma,” in which cybercriminals need to succeed only once to breach a system, while defenders must succeed every time to keep it safe.

“AI disproportionately benefits those defending themselves, because it gives them a tool that can significantly change the situation, compared with those trying to exploit it. So in some ways, we are winning the race,” he said.

Last week, Google announced a new initiative that aims to improve online security by offering AI tools and investing in infrastructure. In a statement, the company said an accompanying white paper outlines research and safeguards and sets out guardrails around AI. Among the tools is Magika, a free, open-source application that aims to help users identify malware, or malicious software.

According to Pichai, the technologies are already being used in the company's internal systems and products, such as Gmail and Google Chrome. He stated, “Policymakers, security experts, and civil society have the opportunity to finally tip the cybersecurity balance from attackers to cyber defenders. AI is at a decisive crossroads.”

The announcement coincided with the signing of an agreement by major companies at the Munich Security Conference to take “reasonable precautions” to prevent AI tools from being used to rig elections in 2024 and beyond.

Adobe, Amazon, Google, IBM, Meta, Microsoft, OpenAI, TikTok, and X were among the signatories to the new agreement. It also sets out a framework for how companies should respond to AI-generated “deepfakes” designed to deceive voters.

The agreement comes as the internet becomes an increasingly important arena of influence for both individual users and state-backed bad actors. On Saturday, former US Secretary of State Hillary Clinton described the internet as a “new battlefield.” “The technology arms race has just gone up another notch with generative AI,” she said in Munich.

According to Microsoft research released last week, state-sponsored hackers from China, Iran, and Russia have been using OpenAI's large language model (LLM) to improve their ability to deceive targets.

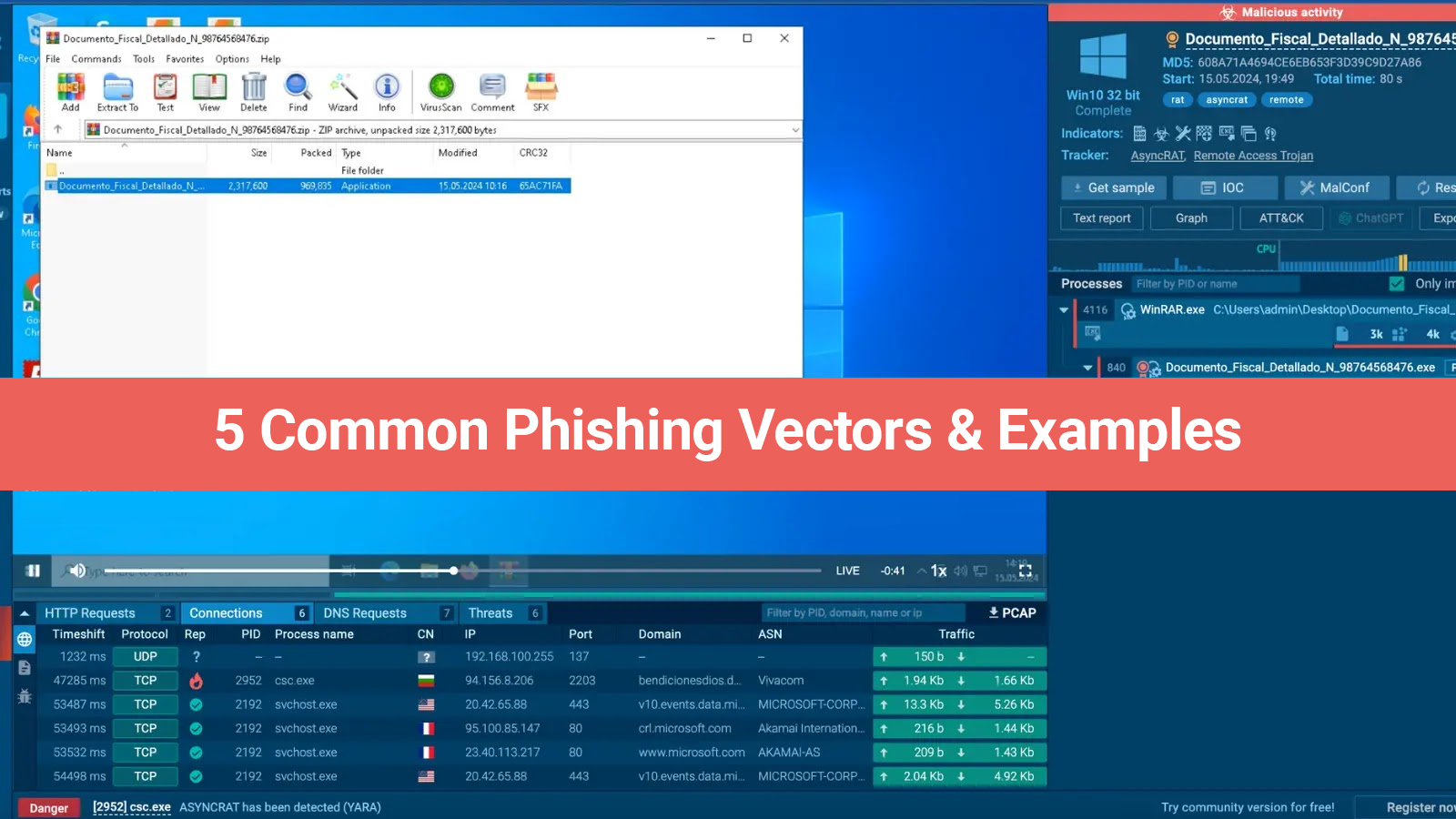

Russian military intelligence, the Iranian Revolutionary Guard, and the Chinese and North Korean governments were all said to have used the tools. Mark Hughes, head of security at DXC Technology, an IT services and consulting company, said malicious actors are increasingly using WormGPT, a hacking tool modeled on ChatGPT, to carry out tasks such as reverse-engineering code, CNBC reported.

But Hughes added that he was also seeing “significant gains” from comparable tools that help engineers quickly detect and reverse-engineer attacks. “It allows us to accelerate,” Hughes said last week. “In cyber, most of the time, what you have is the time that the attackers have as an advantage over you. That is often the case in any conflict.”